As voters in the recent New Hampshire primary have found, a fake robocall of President Joe Biden has been making the rounds. Created with AI voice-cloning technology, the bogus message urges Democratic voters to stay home and “… save your vote for the November election.”

The phony message went on to say, “Your vote makes a difference in November, not this Tuesday.”

NBC News first reported the story[i], and the New Hampshire Attorney General’s office has since launched an investigation into what it calls an apparent “unlawful attempt to disrupt the New Hampshire Presidential Primary Election and to suppress New Hampshire voters.”[ii]

This is just one of the many AI voice-clone attacks we’ll see this year, not only in the U.S. but worldwide, as crucial elections take place in many countries.

Indeed, billions of people will cast their votes this year, and the rise of AI technologies demands something important from all of us: everyone must be a skeptic.

With AI tools making voice clones, video and photo deepfakes, and other forms of disinformation so easy to create, people should be on guard. Put simply, we need to run the content we see and hear through our own personal lie detectors.

Your own AI lie detector — the quick questions that can help you spot a fake.

As AI tools create increasingly convincing content, a couple of things make it tough to spot a fake.

First, our online lives operate at high speed. We’re busy, and a lot of content zips across our screens each day. If something looks or sounds just legit enough, we might assume it’s authentic without questioning it.

Second, we encounter a high volume of content that stirs up big emotions, which makes us less critical of what we see and hear. When fake content riles us up with anger or outrage, we might react rather than stop to find out whether it’s true.

That’s where your personal lie detector comes in. Take a moment. Pause. And ask yourself a few questions.

What kind of questions? Common Sense Media offers several that can help you sniff out what’s likely real and what’s likely false. As you read articles, watch videos, or receive that robocall, you can ask yourself:

- Who made this?

- Who is the target audience?

- Does someone profit from it?

- Who paid for this content?

- Who might benefit from or be harmed by this message?

- What important info is left out of the message?

- Is this credible? Why or why not?

Answering even a few of them can help you spot a scam or a piece of disinformation, or at least sense that one might be afoot. Take the President Biden robocall as an example. Asking just three questions tells you a lot.

First, “Is this credible?”

In the call, the phony message from the President asks voters to “… save your vote for the November election.” Would the President of the United States truly ask you not to vote in an election? Not to exercise a basic right? No. That implausibility is a strong indication of a fake.

Second, “Who might benefit from or be harmed by this message?”

This question takes a little more digging to answer. Because the Democratic Party moved its first Presidential primary from New Hampshire to South Carolina this year, President Biden’s name did not appear on the New Hampshire primary ballot. In response, local supporters launched a grassroots effort encouraging voters to write in Joe Biden on their Tuesday ballot to show support for their favored candidate. The disinformation in the AI-cloned robocall could undermine that effort, and a message that works against the President’s own supporters is unlikely to have come from him, yet another strong indication of a fake.

Lastly, “What important info is left out of the message?”

How does “saving your vote” for another election help a candidate? The message fails to explain how, because it doesn’t help. You have a vote in every election; there’s no saving it for later. That gap raises another major red flag.

While these questions don’t give definitive answers, they certainly call many aspects of the audio into question. Once you ask yourself a few quick questions and run the answers through your own internal lie detector, everything about this robocall points to disinformation.

You have the tools to spot a fake – and soon you’ll have even more.

With political stakes particularly high this year, expect to see more of these disinformation campaigns worldwide. We predict that more bad actors will use AI tools to make candidates say things they never said, give people incorrect polling information, and generate articles that mislead people on any number of topics and issues.

Expect to use your lie detector. By slowing down and asking some of those “Common Sense” questions, you can uncover plenty.

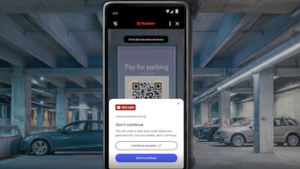

Also, take comfort in knowing that we’re developing technologies that detect AI fakes, like our Project Mockingbird for AI-generated audio. In addition, we’re working on technologies that detect AI-generated images, video, and text. Our goal is to make spotting a fake far easier than it is today, something you can do in seconds, like having an AI lie detector in your back pocket.

Between those technologies and your own common sense, you’ll have powerful tools to know what’s real and what’s fake out there.